Picture the scene – your project has been running for a few months, and each team has been building their features and testing them; the website team have been proudly showing off the gorgeous new site; the database team have been fine-tuning for performance; the ERP team have added new features to maintain the new stock items; the CRM team have added attributes to capture the new customer data; and the integration team have been building their interfaces. Everyone has been working from an overarching high-level design, and from interface specifications so that the website guys can work independently of the integration guys, who can be independent of the CRM guys. This is good practice for managing the project, whether it’s a small enhancement or a massive transformation; whether it’s being delivered waterfall or agile [1].

Now comes the time for System Integration Testing – often known as SIT, sometimes called End-to-End Testing, sometimes just Integration Testing. Whatever you call it, this is when all the teams come together, connect all the pieces of the project and start testing how transactions flow through the whole thing. Any defects you find now can be orders of magnitude more expensive to fix than if they had been found earlier, because several teams are affected at once. In the worst case, you can have multiple teams burning weeks of time waiting for a fix to be implemented and deployed.

Let’s say the CRM team accidentally built their Web Service to an earlier version of the spec document and now customer address updates are not getting through. You may have several teams investigating the issue to work out that this is what happened; a few meetings of finger pointing to work out who was using the ‘right’ version; the test team must shelve a bunch of test cases and move on to some that they haven’t fully defined yet, introducing risk; the CRM team need to rebuild their Web Service; and you might also decide that the Integration team need to modify the interface to handle the error more gracefully next time. That all adds up to a lot of extra effort and can involve delays to the testing schedule and possibly the whole project.

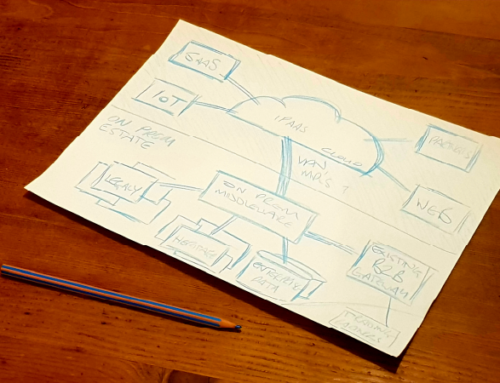

Unfortunately, this is a scenario that happens all too often. Integration can be complex. The technology is built in layers upon layers, both conceptually and physically. The different protocols and data types involved need to be translated. Data needs to be temporarily stored, and transactions need to be consistent. But more than the technical aspects, complexity comes from the fact that people need to communicate effectively over the course of what is often months, and multiple teams need to interlock on changing designs, specifications and delivery schedules. So, defects do happen during SIT. But often these defects can be avoided.

Why do these costly defects happen? Well, it pains me to see how often, even today, interfaces are not being tested properly. There’s no doubt that testing an interface is difficult. If you’re testing a website, or something with a user interface, the job of understanding the requirements and writing test scripts can be done by people who understand what the end result should look like, rather than how it’s been built, and the test scripts can be run by anyone who can follow the script carefully and report the results clearly. Testing an interface is different. You need to understand more about the different behaviours that the interface has implemented. How does it deal with errors? Is it asynchronous? What input protocol does it use? If it’s receiving a Web Service call, how does a tester initiate that? If it’s calling a Web Service, how does the tester avoid ending up testing that Web Service too? All too often the developers are left to do the best job they can with testing the interface, and often even the developers are unable to sufficiently drive the behaviour of the interface.

Avoiding these issues is simple, and the knowledge has been around for a long time now: we must use test harnesses to send appropriate inputs into the interface, and mock endpoints to mimic the behaviour of the systems that the interface calls. There are some really simple ways to do this, such as using the popular interface testing tool SoapUI, and there are some really sophisticated ways to do this, such as using IBM Service Virtualisation or CA Service Virtualisation [2]. However, you can achieve great results with open source tools like WireMock. But tools aren’t the whole story – we need testers who are specialised in testing integration. This is well suited to developers who have a penchant for pedantry and really like to find bugs, but there are plenty of testers who can turn their hand to this, especially if they’ve been used to writing sophisticated UI automation scripts. The best approach, though, is to make each developer responsible for building their own test cases, and to follow a Test Driven Development or a Behaviour Driven Development methodology, where quality assurance is built into the development process from first principles.
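To make the harness-and-mock idea concrete, here is a minimal sketch in Python using only the standard library. Everything in it is an assumption made for illustration: `forward_address_update` stands in for an interface that maps a website message to a CRM call, and the field names, the `/customers/address` path and the canned reply are all hypothetical. The mock endpoint records what it receives, and the harness drives the interface and checks both the reply and the recorded request.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class MockCrmHandler(BaseHTTPRequestHandler):
    """Mock endpoint: records each request body and returns a canned reply."""

    received = []  # shared list so the harness can inspect what arrived

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        MockCrmHandler.received.append(json.loads(self.rfile.read(length)))
        reply = json.dumps({"status": "accepted"}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(reply)))
        self.end_headers()
        self.wfile.write(reply)

    def log_message(self, *args):
        pass  # keep the test output quiet


def forward_address_update(message, host, port):
    """Hypothetical interface under test: maps website fields to CRM fields."""
    payload = {"customerId": message["id"], "postcode": message["zip"].upper()}
    connection = http.client.HTTPConnection(host, port, timeout=5)
    try:
        connection.request(
            "POST",
            "/customers/address",
            body=json.dumps(payload).encode(),
            headers={"Content-Type": "application/json"},
        )
        return json.loads(connection.getresponse().read())
    finally:
        connection.close()


def run_harness():
    """Test harness: start the mock, drive the interface, report what happened."""
    MockCrmHandler.received.clear()
    server = HTTPServer(("127.0.0.1", 0), MockCrmHandler)  # port 0 = any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        port = server.server_address[1]
        result = forward_address_update({"id": "C42", "zip": "ab1 2cd"}, "127.0.0.1", port)
        return result, list(MockCrmHandler.received)
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    result, seen = run_harness()
    assert result == {"status": "accepted"}
    assert seen == [{"customerId": "C42", "postcode": "AB1 2CD"}]
```

Because the mock stands in for the CRM, the interface can be tested without the real Web Service being available, and without accidentally testing that Web Service too. A tool like WireMock or SoapUI gives you the same pattern with far more features.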

At Wheeve, we believe in establishing a continuous integration pipeline, with automated build, test and deployment stitched seamlessly together. This is an essential tool for ensuring the delivery of high-quality code, on time and to budget. I’m still amazed when development teams don’t use CI to automate the mundane tasks, and just dive into writing code without establishing the hygiene factors first.

Whether you go full-on CI with harnesses and mocks, or whether you just help your developers to consider all the interface usage scenarios and ensure they have unit tested all of them, just make sure that you test your interfaces thoroughly before you get to System Integration Testing if you want to avoid costly defects.
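Even without a full pipeline, listing the usage scenarios as data and running them all is a cheap way to start. The sketch below is illustrative only: `handle_update`, `CrmUnavailable`, the outcome strings and the three scenarios are hypothetical names invented for this example, and the downstream call is passed in as a plain function so that a stub can replace the real system.

```python
class CrmUnavailable(Exception):
    """Hypothetical error raised when the downstream CRM cannot be reached."""


def handle_update(message, send):
    """Hypothetical interface logic: validate, send, and report an outcome."""
    if not message.get("id"):
        return "rejected"
    try:
        send({"customerId": message["id"]})
    except CrmUnavailable:
        return "queued_for_retry"
    return "delivered"


def working_send(payload):
    """Stub for a healthy downstream system."""
    return None


def failing_send(payload):
    """Stub for a downstream system that is down."""
    raise CrmUnavailable()


# One row per usage scenario: name, input message, downstream stub, expected outcome.
SCENARIOS = [
    ("happy path", {"id": "C42"}, working_send, "delivered"),
    ("missing customer id", {}, working_send, "rejected"),
    ("CRM unavailable", {"id": "C42"}, failing_send, "queued_for_retry"),
]


def run_scenarios():
    """Run every scenario and return a map of scenario name to pass/fail."""
    return {
        name: handle_update(message, send) == expected
        for name, message, send, expected in SCENARIOS
    }


if __name__ == "__main__":
    for name, passed in run_scenarios().items():
        print(f"{name}: {'PASS' if passed else 'FAIL'}")
```

Adding a new scenario is then a one-line change to the table, which makes it easier to review whether the error and edge cases have actually been covered.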

Or better still, why not talk to us at Wheeve! We have used sophisticated integration testing with a range of tools in our client projects, and we have helped organisations like yours to establish high-performing integration teams that use proven processes and simple yet rigorous methods, combined with state-of-the-art technology and tools, to deliver high-quality solutions for projects and programmes.

Footnotes:

[1] The agile gurus amongst you will probably suggest feature teams are a better way to go for an agile project, but how we use model stories for integration developers and how we organise developers against these stories is the subject of a whole different article!

[2] For a long time the two best tools were ITKO LISA and Green Hat Tester. Over the last 5 to 10 years IBM have bought Green Hat and renamed it IBM Service Virtualisation, and CA have bought ITKO LISA and renamed it CA Service Virtualisation.